Now that nearly every team has played at least 10 games, one might think we have enough data to form an accurate assessment of any team based on what it has done on the court this season. So why do preseason ratings still influence the current ratings? Because there actually isn’t enough data to work with yet. The opinion one had of a team before the games started still has some predictive value.

To illustrate this, I looked at the teams that had deviated the most from their preseason rating at this time last season. For instance, shown below are the ten teams that exceeded their preseason rating the most heading into the 2011 Christmas break, listed with their preseason rank and their ranking on December 24.

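| Team | Pre | 12/24 |
|---|---:|---:|
| Wyoming | 273 | 88 |
| La Salle | 217 | 69 |
| Middle Tenn. | 178 | 58 |
| Mercer | 247 | 116 |
| W. Illinois | 332 | 216 |
| Wagner | 206 | 80 |
| Indiana | 50 | 6 |
| Virginia | 88 | 24 |
| Wisconsin | 10 | 1 |
| St. Louis | 62 | 15 |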

(To compare rating differences, I’m using the Z-score of the Pythagorean winning percentage. If this means nothing to you, basically I’m accounting for the fact that a given difference in Pyth values at the extremes of the ratings is equivalent to a larger difference in the middle of the ratings. For example, Indiana’s move in the ratings represented the same improvement as Wagner’s even though the Hoosiers moved up fewer spots.)
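For the curious, here is a minimal sketch of that kind of transform. It assumes "Z-score" means the standard-normal quantile of the Pyth value, which is my reading and not something spelled out above. The function name `pyth_to_z` and the Pyth values are hypothetical, picked only to show the shape of the curve.

```python
from statistics import NormalDist


def pyth_to_z(pyth: float) -> float:
    """Map a Pythagorean winning percentage in (0, 1) to a standard-normal quantile.

    Assumption: this is what "Z-score of the Pythagorean winning percentage" means.
    """
    return NormalDist().inv_cdf(pyth)


# Hypothetical Pyth values: the same 0.05 gap, once in the middle and once near the top.
gap_in_middle = pyth_to_z(0.55) - pyth_to_z(0.50)
gap_at_extreme = pyth_to_z(0.98) - pyth_to_z(0.93)

print(f"0.50 -> 0.55 is worth {gap_in_middle:.3f} Z")
print(f"0.93 -> 0.98 is worth {gap_at_extreme:.3f} Z")
```

Under this assumption, the same Pyth gap counts for more at the extremes than in the middle, which is why a short climb near the top of the rankings can equal a long climb in the middle.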

If the preseason ratings are weighted properly, then there shouldn’t be a pattern to how these teams trend from December 24 through the rest of the season. Some teams will see their ranking improve and some will see it get worse. I’ve expanded the outlier list to 20 and added two columns: each team’s final ranking and the difference between that ranking and the December 24 edition (positive means the team finished higher than it ranked on December 24).

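| Team | Pre | 12/24 | Final | Diff |
|---|---:|---:|---:|---:|
| Wyoming | 273 | 88 | 98 | -10 |
| La Salle | 217 | 69 | 64 | +5 |
| Middle Tenn | 178 | 58 | 60 | -2 |
| Mercer | 247 | 116 | 91 | +25 |
| W Illinois | 332 | 216 | 186 | +30 |
| Wagner | 206 | 80 | 112 | -32 |
| Indiana | 50 | 6 | 11 | -5 |
| Virginia | 88 | 24 | 33 | -9 |
| Wisconsin | 10 | 1 | 5 | -4 |
| St. Louis | 62 | 15 | 14 | +1 |
| Georgia St. | 182 | 76 | 71 | +5 |
| Toledo | 337 | 267 | 208 | +59 |
| Cal Poly | 192 | 103 | 165 | -62 |
| Murray St. | 110 | 43 | 45 | -2 |
| Lamar | 214 | 121 | 113 | +8 |
| Ohio | 111 | 48 | 62 | -14 |
| Denver | 159 | 75 | 80 | -5 |
| Illinois St. | 181 | 98 | 81 | +17 |
| Georgetown | 48 | 14 | 13 | +1 |
| Oregon St. | 124 | 61 | 85 | -24 |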

I suppose if you had some interest in Toledo you might have had a legitimate beef with the preseason influence on December 24. But the other teams didn’t move all that much, except for Cal Poly which moved downward significantly. If you average the ranking differences (I realize this isn’t the most scientific way to do this analysis), you get -0.9 per team. Pretty much unbiased.

For symmetry, let’s take a look at the teams that were outliers in the other direction. These programs underperformed their preseason ratings the most through December 24.

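| Team | Pre | 12/24 | Final | Diff |
|---|---:|---:|---:|---:|
| Utah | 140 | 316 | 303 | +13 |
| Wm & Mary | 160 | 322 | 285 | +37 |
| Mt St Mary's | 210 | 314 | 294 | +20 |
| Maryland | 47 | 166 | 134 | +32 |
| UC Davis | 251 | 330 | 326 | +4 |
| Nicholls St. | 276 | 338 | 332 | +6 |
| Grambling | 343 | 345 | 345 | 0 |
| Towson | 309 | 343 | 338 | +5 |
| Monmouth | 262 | 328 | 277 | +51 |
| UAB | 72 | 175 | 133 | +42 |
| Rider | 147 | 248 | 199 | +49 |
| N Illinois | 315 | 340 | 330 | +10 |
| N Arizona | 229 | 301 | 341 | -40 |
| Portland | 130 | 229 | 278 | -49 |
| Jacksonville | 151 | 239 | 228 | +11 |
| Binghamton | 324 | 342 | 343 | -1 |
| Arizona St. | 70 | 158 | 223 | -65 |
| Kennesaw St. | 272 | 323 | 313 | +10 |
| UC Riverside | 224 | 291 | 284 | +7 |
| Rhode Island | 180 | 258 | 202 | +56 |
| VMI | 200 | 268 | 254 | +14 |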

There’s more of a trend here. On average, these teams improved by 10 spots between Christmas and the final ratings. (The average for the first ten teams listed was 21 spots, with every team but Grambling improving. And Grambling’s numbers did improve, but they were so far in last place they couldn’t catch #344.)

For comparison, let’s look at a world where preseason ratings aren’t used. They’re created for fun and discarded once games are played. The next set of tables looks at the same groups of teams, but the 12/24 column depicts a team’s ranking had there been no preseason influence on 12/24. First, the early-season improvers.

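| Team | Pre | 12/24 | Final | Diff |
|---|---:|---:|---:|---:|
| Wyoming | 273 | 43 | 98 | -55 |
| La Salle | 217 | 62 | 64 | -2 |
| Middle Tenn | 178 | 44 | 60 | -16 |
| Mercer | 247 | 82 | 91 | -9 |
| W Illinois | 332 | 122 | 186 | -64 |
| Wagner | 206 | 58 | 112 | -54 |
| Indiana | 50 | 6 | 11 | -5 |
| Virginia | 88 | 15 | 33 | -18 |
| Wisconsin | 10 | 1 | 5 | -4 |
| St. Louis | 62 | 13 | 14 | -1 |
| Georgia St. | 182 | 73 | 71 | +2 |
| Toledo | 337 | 205 | 208 | -3 |
| Cal Poly | 192 | 72 | 165 | -93 |
| Murray St. | 110 | 30 | 45 | -15 |
| Lamar | 214 | 88 | 113 | -25 |
| Ohio | 111 | 33 | 62 | -29 |
| Denver | 159 | 50 | 80 | -30 |
| Illinois St. | 181 | 75 | 81 | -6 |
| Georgetown | 48 | 11 | 13 | -2 |
| Oregon St. | 124 | 46 | 85 | -39 |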

Where the average ranking decline in the world influenced by preseason ratings was about one spot, this group drops by an average of 23 spots. Clearly, the lack of preseason influence would cause a bias. The opposite effect is observed with the decliners…

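| Team | Pre | 12/24 | Final | Diff |
|---|---:|---:|---:|---:|
| Utah | 140 | 330 | 303 | +27 |
| Wm & Mary | 160 | 340 | 285 | +55 |
| Mt St Mary's | 210 | 331 | 294 | +37 |
| Maryland | 47 | 236 | 134 | +102 |
| UC Davis | 251 | 335 | 326 | +9 |
| Nicholls St. | 276 | 338 | 332 | +6 |
| Grambling | 343 | 345 | 345 | 0 |
| Towson | 309 | 343 | 338 | +5 |
| Monmouth | 262 | 336 | 277 | +59 |
| UAB | 72 | 201 | 133 | +68 |
| Rider | 147 | 276 | 199 | +77 |
| N Illinois | 315 | 341 | 330 | +11 |
| N Arizona | 229 | 321 | 341 | -20 |
| Portland | 130 | 252 | 278 | -26 |
| Jacksonville | 151 | 261 | 228 | +33 |
| Binghamton | 324 | 344 | 343 | +1 |
| Arizona St. | 70 | 188 | 223 | -35 |
| Kennesaw St. | 272 | 324 | 313 | +11 |
| UC Riverside | 224 | 313 | 284 | +29 |
| Rhode Island | 180 | 279 | 202 | +77 |
| VMI | 200 | 292 | 254 | +38 |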

The average improvement under the preseason-weighting scheme was 10 spots, but in a no-preseason scheme it’s 27 spots. Without preseason influence at this time of year, you can be nearly certain that a team that has overachieved relative to the initial ratings would be overrated. Likewise, a team that has dramatically underachieved would be almost certain to see its rating improve. That is to say, the ratings would be biased without preseason influence.
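If you want to check the averages yourself, a short sketch like the following will do it. The numbers are the Diff columns copied from the four tables above; the variable names are mine.

```python
from statistics import mean

# Diff columns (12/24 rank minus final rank) copied from the four tables above.
# Positive means the team finished higher than it ranked on December 24.
diffs = {
    "overachievers, with preseason": [
        -10, 5, -2, 25, 30, -32, -5, -9, -4, 1,
        5, 59, -62, -2, 8, -14, -5, 17, 1, -24,
    ],
    "overachievers, no preseason": [
        -55, -2, -16, -9, -64, -54, -5, -18, -4, -1,
        2, -3, -93, -15, -25, -29, -30, -6, -2, -39,
    ],
    "underachievers, with preseason": [
        13, 37, 20, 32, 4, 6, 0, 5, 51, 42,
        49, 10, -40, -49, 11, -1, -65, 10, 7, 56, 14,
    ],
    "underachievers, no preseason": [
        27, 55, 37, 102, 9, 6, 0, 5, 59, 68,
        77, 11, -20, -26, 33, 1, -35, 11, 29, 77, 38,
    ],
}

# Average rank change per group; a value near zero suggests little bias.
averages = {group: mean(values) for group, values in diffs.items()}

for group, avg in averages.items():
    print(f"{group}: {avg:+.1f} spots")
```

As noted earlier, averaging rank differences is not the most scientific way to do this, but it is enough to see that the gap from zero is much larger in both no-preseason groups.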

And this is because a dozen games are not enough to get an accurate picture of a lot of teams, especially when most of those games involve large amounts of garbage time. That’s not to say there isn’t a lot of value in the games that have been played. The fact that the preseason ratings are only given two to three games’ worth of weight at this point is an indication of that. Teams that have deviated substantially from their preseason ratings are almost surely not going to revert to that preseason prediction. But what’s nearly as certain is that a team’s true level of play is closer to its preseason prediction than its performance to date suggests.
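To give a feel for what "two to three games’ worth of weight" can mean, here is a rough sketch that treats the preseason rating as a few extra games in a weighted average. This is an assumption made for illustration, not a description of how the actual ratings combine the two; the function `blended_rating`, its parameters, and the input values are all hypothetical.

```python
def blended_rating(
    preseason: float,
    observed: float,
    games_played: int,
    preseason_games: float = 2.5,
) -> float:
    """Weighted average in which the preseason rating counts as `preseason_games` games.

    Assumption: a simple linear blend; the real system may work differently.
    """
    total_weight = games_played + preseason_games
    return (preseason_games * preseason + games_played * observed) / total_weight


# Hypothetical team: rated 0.0 in preseason, playing at a 1.0 level over 12 games.
rating = blended_rating(preseason=0.0, observed=1.0, games_played=12)
print(f"blended rating: {rating:.3f}")
```

With these made-up inputs, the blended rating sits much closer to the observed level than to the preseason one, but it is still pulled part of the way back.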

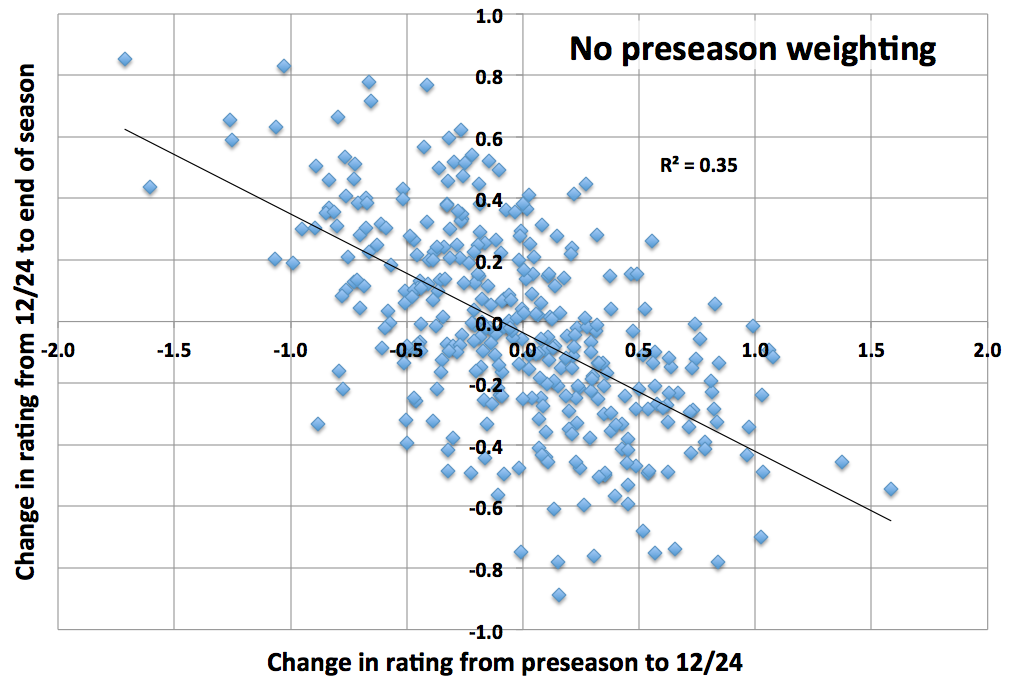

If you’ve made it this far, you’ve earned some bonus visuals. So let’s take a look at how the ratings changed last season in the entire D-I population, comparing change from the beginning of the season to Christmas and change from Christmas to the end of the season (using Z-score).

The plot on the top is without preseason ratings and the plot on the bottom is under the existing system. Notice that without preseason ratings, the change between the beginning of the season and Christmas is correlated with the change between Christmas and the end of the season. With preseason ratings, the two changes are almost uncorrelated, as they should be in an unbiased system.
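The check behind those plots can be sketched as a correlation between the early-season change and the late-season change. The helper names are mine and the four-team input is made up purely to show the mechanics; it is not the data in the plots.

```python
from math import sqrt


def pearson(xs: list[float], ys: list[float]) -> float:
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / sqrt(var_x * var_y)


def change_correlation(pre: list[float], xmas: list[float], final: list[float]) -> float:
    """Correlate the preseason-to-Christmas change with the Christmas-to-final change."""
    early = [x - p for p, x in zip(pre, xmas)]
    late = [f - x for x, f in zip(xmas, final)]
    return pearson(early, late)


# Hypothetical Z-scores for four teams whose Christmas ratings overshoot in
# the direction of their early move, then partly give it back.
pre = [0.0, 0.5, -0.5, 1.0]
xmas = [1.0, 0.0, 0.5, 1.2]
final = [0.6, 0.2, 0.1, 1.1]

r = change_correlation(pre, xmas, final)
print(f"correlation of early vs. late change: {r:.2f}")
```

A correlation far from zero, as in this toy overshoot case, is the signature of a biased rating; a value near zero is what an unbiased system should produce.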

Another conclusion that can be drawn from these plots is that the system would be more volatile without the influence of preseason ratings. Changes after December 24 are greater in the plot on the top than in the one on the bottom. This raises the question: How long should preseason influence last? Based on Nate Silver’s findings, there’s some strong evidence that it would improve predictions to include one or two games’ worth of preseason expectation through the end of the season instead of having it expire in late January. The plot on the bottom suggests there’s still enough rebound that the Christmastime ratings should include more preseason juice. But the mix appears to be close enough to right, and certainly much closer than not including preseason ratings at all, that it’s not worth losing any sleep over.